This educational blog presents a high-level overview of KVM-based virtualization environments for private clouds. For more complete and technical information on virtualization environments, start with Lightbits Storage for OpenShift Virtualization.

What is KVM-based virtualization?

KVM-based virtualization refers to a data center architecture approach that uses Kernel-based Virtual Machine (KVM) technology to create and manage virtual machines (VMs) on a Linux host. This type of virtualization is popular with organizations seeking open-source, cost-effective alternatives to proprietary hypervisors like VMware. It’s commonly integrated into cloud platforms like OpenStack for building large-scale, multi-tenant environments. Key features include:

- Full Virtualization: Supports running multiple, unmodified operating systems simultaneously.

- Performance: Takes advantage of hardware virtualization features in modern CPUs.

- Flexibility: Works with various storage, networking, and virtualization management tools.

What is a Kernel-Based Virtual Machine?

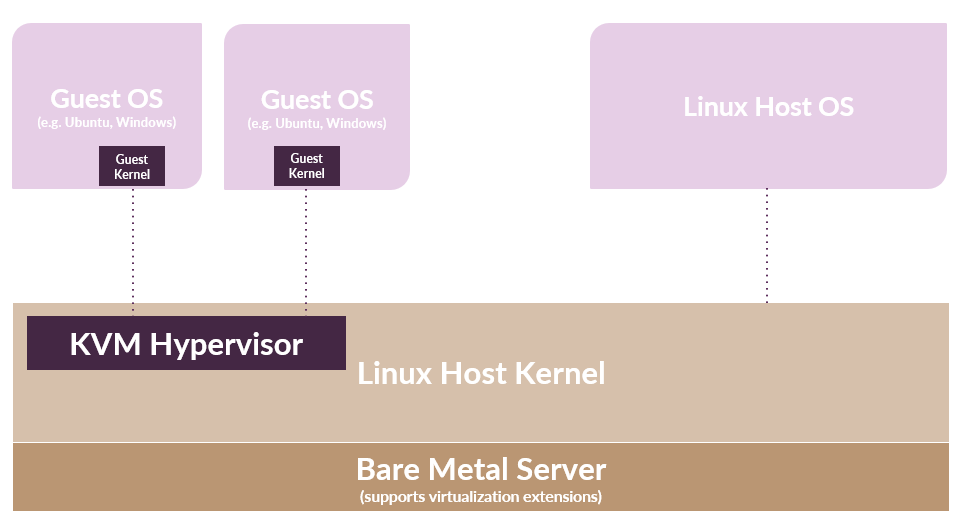

KVM is open-source virtualization software that turns the Linux kernel into a hypervisor, enabling multiple VMs to run on the same physical server, each with its own operating system (OS) and resources. A VM is a software-defined computer that behaves like an independent machine inside a physical computer. KVM-based virtualization refers to the use of KVM technology to create and manage these virtual machines on a Linux-based system.

KVM is a Linux kernel component that provides native support for VMs. It was introduced in Linux kernel version 2.6.20, which was released in February 2007. Because KVM is integrated into the Linux kernel, it can leverage the kernel’s existing features for memory management, process scheduling, and hardware resource allocation, and it benefits from new Linux features, fixes, and advancements without additional engineering.

What is a KVM hypervisor?

I’ll start by explaining a hypervisor in general terms. A hypervisor can be software, firmware, or hardware that creates and manages VMs in a virtualized environment. It acts as an intermediary between the physical hardware and the VMs, allocating resources (like CPU, memory, and storage) and managing the interaction between the physical host and the virtualized environments. Because a hypervisor can consolidate multiple VMs onto a single physical server, it is an essential component for efficient resource utilization in data centers and a popular architecture model for cloud environments and enterprise IT.

There are two main types of hypervisors: bare-metal hypervisors and hosted hypervisors. Bare-metal hypervisors run directly on the physical hardware of the host machine and deliver high performance and low latency since they interact directly with the hardware. The KVM hypervisor is a bare-metal hypervisor, as is VMware ESXi. Hosted hypervisors run on top of a host OS, which accesses the hardware resources. Hosted hypervisors are commonly used in desktop environments because they are easier to set up. An example of a hosted hypervisor is VMware Workstation.

In either case, the hypervisor’s primary role is to ensure that each VM runs in an isolated environment. This is important for several reasons: security, because the vulnerabilities of one VM will not affect others; resource allocation control; and stability and reliability, because the performance issues of one VM (like memory leaks or CPU spikes) will not impact the others. The hypervisor also allocates resources efficiently by dynamically distributing CPU, memory, storage, and networking resources, and it simplifies operations such as the creation, deletion, suspension, and migration of VMs.

The KVM hypervisor, then, is specific to Linux and enables full virtualization. It provides each VM with all the typical services of a physical system, including a virtual BIOS (basic input/output system) and virtual hardware such as processor, memory, storage, and network cards. As a result, every VM completely simulates a physical machine.

KVM Hypervisor benefits

Since KVM is part of Linux, it offers a straightforward user experience and smooth integration. KVM also has further benefits when compared to other virtualization technologies.

Performance – One of the main drawbacks of traditional virtualization technologies is performance degradation compared to physical machines. Since KVM is a bare-metal hypervisor, it typically outperforms hosted hypervisors and delivers near-metal performance. With the KVM hypervisor, VMs boot fast and achieve the desired performance results.

Scalability – As a Linux kernel module, the KVM hypervisor scales to handle heavier loads as the number of VMs increases. The KVM hypervisor also enables clustering for thousands of nodes, laying the foundations for cloud infrastructure implementation.

Security – Since KVM is part of the Linux kernel source code, it benefits from the world’s biggest open-source community collaboration, a rigorous development and testing process, and continuous security patching.

Maturity – KVM has been actively developed since its introduction. It is a nearly two-decade project, presenting a high level of maturity. More than 1,000 developers around the world have contributed to the KVM code.

Cost-efficiency – Cost is a driving factor for many organizations. Since KVM is open source and available as a Linux kernel module, it comes at zero cost out of the box. You can optionally subscribe to various commercial programs, such as Ubuntu Pro, to receive enterprise support for KVM-based virtualization environments.

Why is KVM Important?

KVM’s powerful combination of performance, security, flexibility, and cost-efficiency makes it a critical tool in modern virtualization strategies and a popular choice for server consolidation in data centers and cloud environments.

Because KVM turns any Linux machine into a bare-metal hypervisor, it allows DevOps teams to scale computing infrastructure without investing in new hardware. It also frees server administrators from manually provisioning virtualization infrastructure and allows large numbers of VMs to be deployed easily in cloud environments.

KVM Features

Here’s why KVM virtualization is significant:

- Open Source and Cost-Effective: You can implement virtualization without the licensing costs associated with proprietary hypervisors, like VMware. This significantly reduces the total cost of ownership for virtualization infrastructure.

- High Performance and Efficiency: As a bare-metal hypervisor embedded directly in the Linux kernel, it allows virtualization to be performed as close as possible to the server hardware, which reduces latency. It also leverages hardware virtualization extensions like Intel VT-x and AMD-V for high performance. KVM efficiently handles I/O operations, resource allocation, and workload balancing, making it suitable for performance-intensive applications like real-time analytics, high-performance databases, and AI/ML.

- Scalability for Cloud and Enterprise: KVM is highly scalable, supporting everything from single-server setups to complex cloud environments. It powers many cloud infrastructure solutions, including OpenStack, because it allows for easy scaling of resources and efficient VM management across large data centers.

- Security and Isolation: KVM provides strong VM isolation, ensuring that each virtual machine operates independently, which is essential for multi-tenant environments. Its isolation capabilities limit the impact of vulnerabilities, helping contain potential security threats and support compliance. KVM inherits security features native to the Linux OS, such as Security-Enhanced Linux (SELinux). This ensures that all virtual environments strictly adhere to their respective security boundaries, strengthening data privacy and governance.

- Compatibility with Linux Tools: As a part of the Linux ecosystem, KVM integrates seamlessly with other Linux management tools and technologies like libvirt for VM management, which simplifies administration. Also, KubeVirt can extend Kubernetes to support virtualized workloads by enabling the deployment and management of VMs alongside containerized applications. KubeVirt relies on KVM as the underlying hypervisor to run VMs on each Kubernetes node. The Linux kernel on each node uses KVM to create isolated, high-performance VMs directly within the Kubernetes cluster.

- Flexible Resource Allocation: KVM allows for dynamic and efficient resource allocation, letting administrators assign specific amounts of CPU, memory, storage, and network bandwidth to each VM. This flexibility optimizes hardware utilization, saving costs and energy.

- Future-Ready and Well-Supported: Backed by the Linux community and integrated into the kernel, KVM receives regular updates, new features, and patches, ensuring it remains secure, future-ready, and aligned with industry advancements.
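To make the resource allocation and libvirt points in the list above more concrete, here is a minimal sketch of how a VM's CPU and memory allocation can be described in libvirt's domain XML format. The helper name `build_domain_xml` and the VM name `demo-vm` are illustrative assumptions, and the output is deliberately incomplete: a usable definition would also need disks, network interfaces, and other devices.

```python
import xml.etree.ElementTree as ET


def build_domain_xml(name: str, vcpus: int, memory_mib: int) -> str:
    """Build a minimal libvirt domain definition for a KVM guest.

    Hypothetical helper for illustration: it only sets the name,
    vCPU count, memory size, and guest type. Disks and NICs are omitted.
    """
    if vcpus < 1 or memory_mib < 1:
        raise ValueError("vcpus and memory_mib must be positive")

    # type="kvm" asks libvirt to run the guest with KVM acceleration.
    domain = ET.Element("domain", type="kvm")
    ET.SubElement(domain, "name").text = name
    ET.SubElement(domain, "memory", unit="MiB").text = str(memory_mib)
    ET.SubElement(domain, "vcpu").text = str(vcpus)

    # "hvm" marks a fully virtualized guest running an unmodified OS.
    os_elem = ET.SubElement(domain, "os")
    ET.SubElement(os_elem, "type", arch="x86_64").text = "hvm"

    return ET.tostring(domain, encoding="unicode")


# Example: a guest with 2 vCPUs and 2 GiB of memory.
print(build_domain_xml("demo-vm", vcpus=2, memory_mib=2048))
```

In practice, a definition like this would typically be handed to libvirt (for example with `virsh define`) after adding storage and networking devices. Changing the `vcpu` and `memory` values is how an administrator would adjust the allocation for a given VM.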

How Does KVM Work?

KVM is built into the Linux kernel, enabling it to act as a hypervisor. The Linux kernel is the core of the open-source OS: the program that interacts with computer hardware and ensures that software applications running on the OS receive the required computing resources. Linux distributions, such as Red Hat Enterprise Linux, Fedora, and Ubuntu, package the Linux kernel into a complete, user-friendly OS.

Because it is part of the kernel, KVM has all the needed OS-level components (memory and security managers, process scheduler, I/O and network stack, and device drivers) to run VMs. It requires a Linux kernel installation on a computer powered by a CPU that supports virtualization extensions, such as Intel VT-x or AMD-V, to provide efficient, high-performance virtualization. Each VM runs its own isolated operating system (like Linux, Windows, etc.), and the host OS (which must be Linux) controls these VMs through the KVM module. This allows multiple independent environments to coexist on the same hardware.
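As a rough illustration of the hardware requirement described above, the following sketch checks whether a Linux host looks ready for KVM. It relies on two Linux conventions: `/proc/cpuinfo` lists the `vmx` flag for Intel VT-x and the `svm` flag for AMD-V, and the KVM module exposes a `/dev/kvm` device node when loaded. The function names are illustrative assumptions, and a real readiness check would also consider firmware settings and permissions.

```python
from pathlib import Path
from typing import Optional

# CPU flag names Linux reports in /proc/cpuinfo for hardware virtualization.
VIRT_FLAGS = {"vmx": "Intel VT-x", "svm": "AMD-V"}


def detect_virt_extension(cpuinfo_text: str) -> Optional[str]:
    """Return the virtualization extension named in cpuinfo text, or None."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            for flag, name in VIRT_FLAGS.items():
                if flag in flags:
                    return name
    return None


def kvm_ready(cpuinfo_path: str = "/proc/cpuinfo",
              kvm_device: str = "/dev/kvm") -> bool:
    """True if the CPU advertises VT-x/AMD-V and the KVM device node exists."""
    cpuinfo = Path(cpuinfo_path)
    if not cpuinfo.exists():
        return False  # Not a Linux host, or /proc is unavailable.
    has_extension = detect_virt_extension(cpuinfo.read_text()) is not None
    return has_extension and Path(kvm_device).exists()


if __name__ == "__main__":
    print("KVM usable on this host:", kvm_ready())
```

Separating the text parsing from the file access keeps the flag detection easy to test with sample input, without needing a host that actually supports virtualization.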

KVM-based Virtualization Architecture

Why Use OpenStack and KVM Together?

OpenStack and KVM are often used together and are a powerful combination for building and managing private or hybrid cloud infrastructures. In an OpenStack cloud, KVM provides the virtualization layer, allowing OpenStack to spin up and manage VMs efficiently. KVM enables resource isolation, meaning multiple VMs can run on the same physical host without interfering with each other. OpenStack uses KVM to create scalable, flexible, and multi-tenant cloud environments, making it an ideal solution for organizations and service providers wanting to build private or hybrid clouds.
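As a small sketch of how this pairing is expressed in configuration, OpenStack's compute service (Nova) is commonly pointed at KVM through its libvirt driver, using the `compute_driver` option in the `[DEFAULT]` section and the `virt_type` option in the `[libvirt]` section of `nova.conf`. The helper below is a hypothetical illustration that renders only those two settings; a real `nova.conf` contains many more options and is usually managed by deployment tooling.

```python
import configparser
import io


def render_nova_compute_config(virt_type: str = "kvm") -> str:
    """Render the hypervisor-related fragment of a nova.conf as INI text.

    Hypothetical helper for illustration only: it emits just the two
    options that select the libvirt driver and KVM as the virt type.
    """
    config = configparser.ConfigParser()
    config["DEFAULT"] = {"compute_driver": "libvirt.LibvirtDriver"}
    config["libvirt"] = {"virt_type": virt_type}

    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


print(render_nova_compute_config())
```

With these settings on a compute node, Nova asks libvirt to launch instances as KVM guests, which is the division of labor the paragraph above describes.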

Why Use OpenShift and KVM Together?

While OpenShift primarily manages containerized workloads with Kubernetes, it can also work alongside KVM to deploy and manage VMs within the same environment. This is typically enabled via KubeVirt. KubeVirt is a Kubernetes extension that enables the management of VMs as Kubernetes-native resources. By deploying KubeVirt on OpenShift, you can use KVM to run VMs alongside containerized workloads. This is useful for applications that require a combination of virtualized and containerized environments or for legacy applications that aren’t easily containerized.
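To show what "VMs as Kubernetes-native resources" can look like, here is a minimal sketch that builds a KubeVirt `VirtualMachine` manifest as a Python dictionary and prints it as JSON, which `kubectl` and `oc` accept alongside YAML. The function name, VM name, and the claim name `demo-root-pvc` are assumptions made for illustration, and field details can vary between KubeVirt versions, so treat this as a starting point to check against the KubeVirt API reference.

```python
import json


def build_kubevirt_vm(name: str, cores: int, memory: str, claim_name: str) -> dict:
    """Build a minimal KubeVirt VirtualMachine manifest (illustrative only)."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            # Keep the VM running; KubeVirt restarts it if it stops.
            "runStrategy": "Always",
            "template": {
                "spec": {
                    "domain": {
                        "cpu": {"cores": cores},
                        "resources": {"requests": {"memory": memory}},
                        "devices": {
                            "disks": [
                                {"name": "rootdisk", "disk": {"bus": "virtio"}}
                            ]
                        },
                    },
                    # The disk is backed by an existing PersistentVolumeClaim;
                    # the claim name is a hypothetical placeholder.
                    "volumes": [
                        {
                            "name": "rootdisk",
                            "persistentVolumeClaim": {"claimName": claim_name},
                        }
                    ],
                }
            },
        },
    }


manifest = build_kubevirt_vm("demo-vm", cores=2, memory="2Gi",
                             claim_name="demo-root-pvc")
print(json.dumps(manifest, indent=2))
```

The disk and volume entries are linked by name, so the VM's root disk is whatever persistent volume the referenced claim is bound to. On each node, KubeVirt then relies on KVM to run the resulting guest.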

What is the Difference Between KVM and VMware?

KVM and VMware are both virtualization technologies but differ significantly in architecture, licensing, and use cases. Both provide virtualization infrastructure built on bare-metal hypervisors: KVM runs as part of the Linux kernel, while VMware ESXi uses its own proprietary kernel. KVM is an open-source feature, while VMware is available via commercial licenses.

KVM is designed for Linux-based, open-source environments and is often used in cloud and data center setups that prefer open-source solutions, such as OpenStack. KVM virtual machines are suitable for organizations seeking flexibility, customized setups, and lower costs. Because it’s a component of the Linux kernel, it takes advantage of Linux’s scheduler and memory management, making it highly efficient and able to deliver near-native performance.

On the other hand, VMware is a proprietary, commercial product that can be used as either a bare-metal (e.g. ESXi) or hosted hypervisor. Managed through vCenter, VMware offers various tiers and features tailored to your organization’s needs, such as advanced monitoring and automation. It offers native features for high availability, disaster recovery (DR), and fault tolerance within the vSphere suite, providing easier configuration and robust options for enterprise users. Its mature management tooling and commercial licensing can make it a costlier option, but it dominates in enterprise environments with strict SLAs, high-availability requirements, and a need for premium support. It’s ideal for organizations that need robust management features and established vendor support.

KVM is a cost-effective, open-source solution best suited for Linux environments and organizations favoring open-source stacks. VMware provides a commercial virtualization suite optimized for enterprise environments that require premium support and ease of management.

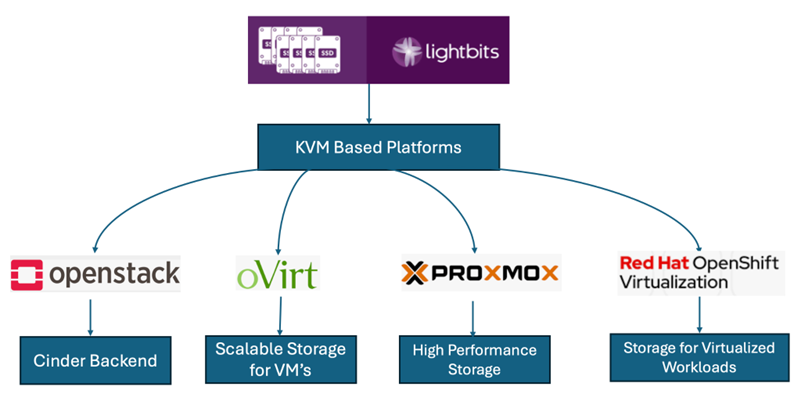

How Does Software-Defined Storage Work with KVM?

Lightbits is a simple block storage solution for KVM-based virtualization. It integrates seamlessly with KVM environments, allowing storage resources to be pooled and accessed by multiple VMs without being tied to specific physical devices. The flexible provisioning capabilities of Lightbits storage mean that KVM can quickly allocate and deallocate storage based on the needs of VMs, improving efficiency and reducing downtime during storage adjustments. Additionally, Lightbits block storage is dynamically scalable; as workloads grow, storage can be easily expanded by adding more nodes without disrupting existing services. This flexibility is particularly important in KVM environments, which often need to handle varying loads and resource demands.

At Lightbits, we believe in the power of open source. That’s why we’ve developed an open-source Container Storage Interface (CSI) plugin that integrates seamlessly with OpenShift Virtualization. This plugin allows you to easily provision and manage high-performance persistent storage for your VMs, making deployment and scaling a breeze. With our CSI plugin, you can leverage the full power of Lightbits storage within your cloud environment, ensuring that your virtualized workloads have access to the performance and features they need.

The Lightbits OpenStack Cinder driver enables seamless integration into your cloud, while high availability, reliability, and QoS ensure a consistent user experience, making Lightbits the fastest, most scalable OpenStack storage solution.

Lightbits is a software-defined storage solution designed for high performance, scale, efficiency, and flexibility. Lightbits is architected from the ground up to deliver the speed and efficiency of direct-attached SSDs with the flexibility and manageability of networked storage. We’re the inventors of NVMe® over TCP, a protocol that allows you to get near-local NVMe performance over standard TCP/IP networks. This means you can have your cake and eat it too – enjoy the performance of local SSDs with the scalability and ease of management of networked storage, all without specialized networking hardware.

Ready to supercharge your KVM virtualization environment with Lightbits? Contact us today for a personalized demo and see the difference for yourself!