Has the emergence of flash storage pushed Ceph, the open source software-defined storage platform, past its prime? The answer, as often happens with complex systems, is “it depends.”

A Brief History of Ceph Storage

When Ceph Storage burst onto the computing scene, it was hailed for its groundbreaking CRUSH placement algorithm. CRUSH runs on top of the RADOS object store and lets any client compute the location of any piece of data directly, in one hop, without querying a central lookup table. Ceph provided both block and object storage (and later file storage) on a single distributed cluster. It is prized for running on commodity hardware while replicating data to make it fault tolerant, for its self-management and self-healing capabilities, and for its ability to save users time and money. Because Ceph scales to the exabyte level and is designed with no single point of failure, it shines in applications that need highly available, flexible storage.
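Ceph's real placement algorithm, CRUSH, walks a weighted hierarchy of failure domains (hosts, racks, rows) defined in a cluster map. The sketch below is not CRUSH. It swaps in much simpler rendezvous (highest-random-weight) hashing only to illustrate the core idea behind one-hop access: placement is *computed* from the object name and the list of storage daemons, so every client arrives at the same answer without asking a central directory. The function names `place` and `_score`, and the `osd.N` naming, are hypothetical choices for this illustration.

```python
from __future__ import annotations

import hashlib


def _score(obj_name: str, osd: str) -> int:
    # Stable pseudo-random weight for an (object, storage daemon) pair.
    digest = hashlib.sha256(f"{obj_name}:{osd}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(obj_name: str, osds: list[str], replicas: int = 3) -> list[str]:
    """Return the daemons that should hold obj_name, computed locally.

    Any client holding the same daemon list computes the same result,
    so no lookup table is consulted. (Rendezvous hashing, not CRUSH.)
    """
    if replicas > len(osds):
        raise ValueError("not enough OSDs for the requested replica count")
    # Rank every daemon by its score for this object; keep the top N.
    ranked = sorted(osds, key=lambda osd: _score(obj_name, osd), reverse=True)
    return ranked[:replicas]


if __name__ == "__main__":
    cluster = [f"osd.{i}" for i in range(8)]
    print(place("rbd_data.example.0000", cluster))
```

A useful property of this scheme, shared in spirit with CRUSH, is that removing a daemon that does not hold an object leaves that object's placement unchanged, so only data on the failed device has to move.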

Bringing on the Flash

Ceph’s influence remains widespread today, yet it is showing signs of age in certain use cases, largely because it was designed around hard disk drives (HDDs). In 2005, HDDs were the prevalent storage medium, but that is changing.

In 2005, the rated response time of an HDD was about 20 ms, and competing I/O loads usually pushed real-world latency higher. If Ceph’s per-I/O lookup and processing overhead took 1 ms (a figure used here only for illustration), that was just 5% of the media latency and made little difference to overall response time. NVMe drives, by contrast, have read latencies of about 100 µs, so the same 1 ms overhead is now 10x the latency of the media. Put simply, you can’t get the full value out of your flash investment when you access it through Ceph.
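The arithmetic above can be checked with a few lines of code. This is a minimal sketch using the illustrative numbers from the paragraph (a hypothetical 1 ms software overhead, ~20 ms HDD, ~100 µs NVMe); it also reports the overhead's share of total response time, which makes the shift even more visible. The function names are hypothetical.

```python
"""Worked arithmetic for the HDD vs. NVMe overhead comparison."""


def overhead_ratio(overhead_ms: float, media_ms: float) -> float:
    """Software overhead expressed as a multiple of the media's own latency."""
    return overhead_ms / media_ms


def overhead_share(overhead_ms: float, media_ms: float) -> float:
    """Fraction of end-to-end latency spent in software rather than on the media."""
    return overhead_ms / (overhead_ms + media_ms)


ILLUSTRATIVE_OVERHEAD_MS = 1.0  # hypothetical, "for example only" as in the text
MEDIA_LATENCY_MS = {
    "HDD (2005, rated)": 20.0,  # ~20 ms, before I/O contention
    "NVMe read": 0.1,           # ~100 µs
}

if __name__ == "__main__":
    for medium, media_ms in MEDIA_LATENCY_MS.items():
        ratio = overhead_ratio(ILLUSTRATIVE_OVERHEAD_MS, media_ms)
        share = overhead_share(ILLUSTRATIVE_OVERHEAD_MS, media_ms)
        print(f"{medium}: overhead = {ratio:.2f}x media latency, "
              f"{share:.1%} of total response time")
```

With these inputs the same fixed overhead goes from about 4.8% of an HDD request's total time to roughly 91% of an NVMe request's total time.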

Today, most enterprises are shifting to flash storage for its performance and maintenance advantages. Next-generation storage solutions, such as our software-defined storage solution Lightbits, are designed natively for flash and for the needs of the modern data center.

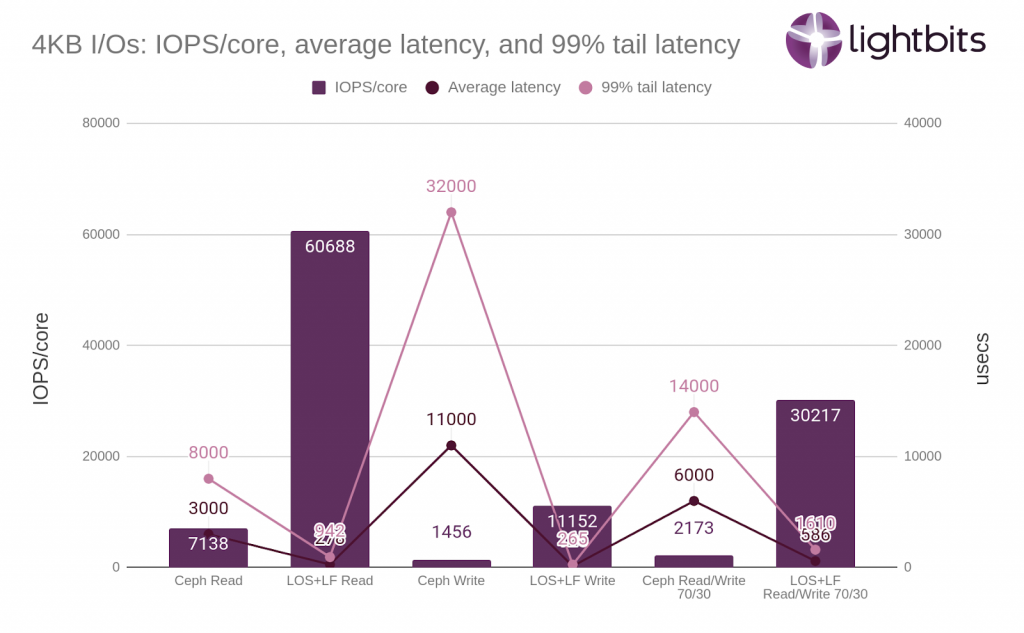

This is by no means an indictment of Ceph: It was a revolutionary offering that became the venerable standard solution for more than a decade. But now, with exploding amounts of data to deal with, many enterprises are looking for greater performance and lower latencies than Ceph can provide. See, for example, the chart below:

As the chart shows, Lightbits delivers 14x more IOPS per core, 87x lower average latency, and 305x lower 99th-percentile tail latency than Ceph. (Full details on the setup and comparison methodology are available in the Lightbits whitepaper “Comparing LightOS and Ceph for Primary Block Storage.”)

These gains reflect the fact that, in some respects, Ceph is showing its age: it does not use resources as efficiently as more modern solutions, and it is resource hungry, requiring many dedicated servers. Modern solutions such as LightOS can do the same job with a fraction of the CPU cores Ceph requires. Measured per CPU core, our tests showed LightOS handling between 14 and 33 times the work that Ceph can do with the same resources.
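IOPS per core normalizes throughput by the CPU cores the storage software consumes, which is what makes a per-core comparison like the one above possible. This is a minimal sketch of that normalization; `BenchmarkRun` and `per_core_advantage` are hypothetical names, and the inputs are meant to come from your own benchmark runs, not from the whitepaper.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkRun:
    """One benchmark result; fill in values from your own measurements."""
    total_iops: float
    cores_used: int  # CPU cores consumed by the storage software

    @property
    def iops_per_core(self) -> float:
        if self.cores_used <= 0:
            raise ValueError("cores_used must be positive")
        return self.total_iops / self.cores_used


def per_core_advantage(candidate: BenchmarkRun, baseline: BenchmarkRun) -> float:
    """How many times more IOPS per core the candidate delivers than the baseline."""
    return candidate.iops_per_core / baseline.iops_per_core
```

Normalizing per core matters because two systems can post similar total IOPS while one of them burns several times as many cores (and servers) to get there.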

Much of that speedup comes from flash being faster than HDD, but Ceph’s reliance on multiple copies of the data also plays a part. Ceph deployments often end up with a resource-intensive 4 or 5 replicas of the data to deliver the reliability and performance customers need. With LightOS, which combines erasure coding with replication, only 2 or 3 copies of the data are required, freeing up considerable storage and compute resources.
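The capacity cost of each approach follows from two simple ratios: N-way replication stores N raw bytes per usable byte, while a k+m erasure code (k data chunks plus m parity chunks) stores (k + m) / k. This is a minimal sketch of those ratios; the function names are hypothetical, and the 4+2 and 8+3 profiles are illustrative, not documented LightOS or Ceph defaults. It does not model how LightOS layers erasure coding and replication together.

```python
def replication_overhead(copies: int) -> float:
    """Raw capacity consumed per byte of usable data with N full copies.

    N-way replication survives the loss of N - 1 copies.
    """
    if copies < 1:
        raise ValueError("need at least one copy")
    return float(copies)


def erasure_coding_overhead(k: int, m: int) -> float:
    """Raw capacity per usable byte for a k+m erasure code.

    Data is split into k chunks and m parity chunks are added;
    any m chunks can be lost without losing data.
    """
    if k < 1 or m < 0:
        raise ValueError("k must be >= 1 and m must be >= 0")
    return (k + m) / k


if __name__ == "__main__":
    for n in (2, 3, 4, 5):
        print(f"{n}-way replication: {replication_overhead(n):.2f}x raw capacity")
    # Illustrative erasure-coding profiles only.
    for k, m in ((4, 2), (8, 3)):
        print(f"EC {k}+{m}: {erasure_coding_overhead(k, m):.2f}x raw capacity")
```

For example, moving from 4 full replicas (4.00x) to a 4+2 code (1.50x) cuts the raw capacity needed for the same usable data by more than half, although parity calculation and rebuilds cost some CPU and network bandwidth in exchange.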

The Reality

You’ll get no argument from me that Ceph still has its place, particularly for large-capacity, HDD-based workloads that are less performance-sensitive. But over time, we will likely see it phased out in industries with demanding performance requirements, such as retail back-end systems, financial services, cloud services, private clouds, artificial intelligence and machine learning applications, healthcare, and media and entertainment, among others.

A decade ago, Ceph was all about making data flexible, scalable, and affordable. Today, emerging solutions are taking up that mantra, and doing it with lower-cost, easily scalable flash.
