In today’s competitive business environment, cloud hosting for enterprise-level deployments requires a highly scalable storage solution to streamline and manage important business data. As technology and best practices move quickly towards cloud-based services to keep pace with evolving businesses, Ceph Storage (Ceph) has emerged as a software-defined storage solution that supports a sustainable model for growth.

In this blog, we outline various aspects of Ceph and how it fulfills the demanding storage needs of businesses.

Overview

What is Ceph Storage?

Understanding the Working Of Ceph Block Storage

Ceph Storage Architecture

NVMe/TCP Support for Ceph

Ceph Storage Performance

Use Cases for Ceph Block and Ceph Object Storage

Advantages and Disadvantages Of Ceph Storage

Ceph Storage Vs AWS S3- Features and Key Differences

Why is Ceph Storage Not Enough for Modern Workloads?

What is Ceph Storage?

Ceph is an open-source, software-defined platform employing a distributed object storage architecture designed for scalability, flexibility, and fault tolerance on commodity infrastructure.

Originally intended for spinning hard disk drives (HDDs), Ceph has incrementally evolved its architecture over the past decade to take advantage of flash storage advances. Recent versions of the software utilize solid-state drives (SSDs) for metadata operations, improving performance. However, many core design elements optimizing HDD behavior remain unchanged.

Ceph aims to provide unified storage, with object, block, and file interfaces in a single platform. The block storage service (RBD) presents virtual block devices to applications. A key benefit of Ceph’s architecture is leveraging commodity hardware to scale capacity across thousands of nodes. However, scaling capacity can substantially increase demands on the supporting cloud network fabric and has been shown to introduce latency with larger-scale private cloud environments.

As a powerful storage solution, Ceph uses its own Ceph file system (CephFS) and is designed to be self-managed and self-healing. It is equipped to deal with outages on its own and constantly works towards reducing administration costs.

Another highlight of Ceph is its fault tolerance, which it achieves by replicating data across nodes. Because data is spread over many nodes rather than funnelled through a central server, there is no single point of failure or central bottleneck while Ceph is operating.

Key features of Ceph include:

– High scalability

– Open-source

– High reliability through distributed data storage

– Robust data security through redundant storage

– Advantage of continuous memory allocation

– Convenient software-based increase in availability via an integrated algorithm for locating data

Understanding the Working Of Ceph Block Storage

Ceph primarily uses a Ceph block device, a virtual disk that can be attached to virtual machines or bare-metal Linux-based servers.

One of the key components in Ceph is RADOS (Reliable Autonomic Distributed Object Store), the object store on which Ceph block storage is built. On top of RADOS, the RADOS Block Device (RBD) offers powerful block storage capabilities such as replication and snapshots, and it can be integrated with OpenStack Block Storage.

Apart from this, Ceph also offers CephFS, a robust POSIX-compliant (Portable Operating System Interface) file system, to store data in its storage clusters. The advantage is that the file system uses the same clustered system as Ceph block storage and object storage to store a massive amount of data.
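To picture how a virtual disk can live on top of an object store, here is a minimal sketch in Python. It assumes the disk is cut into fixed-size objects of 4 MiB (an assumed value for illustration; the object size is a per-image setting), and the function names are hypothetical. It only shows the offset arithmetic, not how Ceph names or places those objects.

```python
# Toy model: a virtual block device chunked into fixed-size objects.
OBJECT_SIZE = 4 * 1024 * 1024  # assumed object size (4 MiB), illustrative only


def locate(byte_offset: int, object_size: int = OBJECT_SIZE) -> tuple[int, int]:
    """Return (object index, offset inside that object) for a disk byte offset."""
    if byte_offset < 0:
        raise ValueError("offset must be non-negative")
    return divmod(byte_offset, object_size)


def objects_touched(byte_offset: int, length: int,
                    object_size: int = OBJECT_SIZE) -> list[int]:
    """List the object indices that an I/O of `length` bytes touches."""
    if byte_offset < 0:
        raise ValueError("offset must be non-negative")
    if length <= 0:
        return []
    first = byte_offset // object_size
    last = (byte_offset + length - 1) // object_size
    return list(range(first, last + 1))
```

A small write that straddles an object boundary touches two objects, which is one reason a single block I/O can fan out to more than one storage node.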

Ceph Storage Architecture

Ceph needs several computers connected to one another in what is known as a cluster. Each of these connected computers within that network is known as a node.

Below are some of the tasks which must be distributed among the nodes within the network:

– Monitor nodes (ceph-mon): These cluster monitors track the status of individual nodes in the cluster, especially the object storage devices, managers and metadata servers. To ensure maximum reliability, it is recommended to have at least three monitor nodes.

– Object Storage Devices (ceph-osd): Ceph OSDs are background processes that handle the actual data management and are responsible for storage, duplication, and data restoration. For a cluster, it is recommended to have at least three OSDs.

– Managers (ceph-mgr): They work in tandem with ceph monitors to manage the status of system load, storage usage, and the capacity of the nodes.

– Metadata servers (ceph-mds): They store metadata such as file names, storage paths and timestamps of files stored in CephFS, which is kept separate from the data for performance reasons.
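The three-monitor recommendation follows from majority voting. The sketch below assumes that monitors need a strict majority to agree on cluster state, as Paxos-style consensus requires; the function names are hypothetical and the code is only the arithmetic, not anything Ceph ships.

```python
def quorum_size(monitors: int) -> int:
    """Smallest strict majority among `monitors` monitor nodes."""
    if monitors < 1:
        raise ValueError("need at least one monitor")
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """How many monitors can fail while a majority is still reachable."""
    return monitors - quorum_size(monitors)
```

Under this assumption, one or two monitors tolerate no failures at all, three tolerate one, and five tolerate two. An even count adds no extra tolerance over the odd count below it, which is why odd numbers are the usual choice.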

The crux of Ceph data storage is an algorithm called CRUSH (Controlled Replication Under Scalable Hashing), which uses the CRUSH map, an allocation table, to find an OSD holding the requested file. CRUSH chooses the ideal storage location based on fixed criteria, after which the files are duplicated and saved on physically separate media. The administrator of the network can set the relevant criteria.
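To make the idea of computed placement more concrete, here is a minimal sketch in Python. It is not the actual CRUSH algorithm: it swaps in simple highest-score hashing (rendezvous hashing), and the cluster map with its OSD and host names is made up for illustration. What it does show is the core idea that any client can work out where an object lives from its name and the map alone, with replicas landing on physically separate hosts.

```python
import hashlib

# Hypothetical cluster map: OSD name -> host (the failure domain in this sketch).
CLUSTER_MAP = {
    "osd.0": "host-a", "osd.1": "host-a",
    "osd.2": "host-b", "osd.3": "host-b",
    "osd.4": "host-c", "osd.5": "host-c",
}


def _score(object_name: str, osd: str) -> int:
    """Deterministic pseudo-random score for an (object, OSD) pair."""
    digest = hashlib.sha256(f"{object_name}:{osd}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, replicas: int = 3,
          cluster_map: dict[str, str] = CLUSTER_MAP) -> list[str]:
    """Pick `replicas` OSDs for an object, at most one per host."""
    ranked = sorted(cluster_map,
                    key=lambda osd: _score(object_name, osd),
                    reverse=True)
    chosen: list[str] = []
    used_hosts: set[str] = set()
    for osd in ranked:
        if len(chosen) == replicas:
            break
        host = cluster_map[osd]
        if host in used_hosts:
            continue  # keep replicas on physically separate hosts
        chosen.append(osd)
        used_hosts.add(host)
    if len(chosen) < replicas:
        raise ValueError("not enough separate hosts for the requested replicas")
    return chosen
```

Calling `place("vm-disk-0001")` returns three OSD names on three different hosts, and the same call always gives the same answer, so no central lookup is needed per request. The "one replica per host" rule stands in for the criteria an administrator would set.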

The base of the Ceph data storage architecture is known as RADOS, a completely reliable, distributed object store composed of self-mapping, intelligent storage nodes.

Ways to access stored data in Ceph include:

– radosgw: Through this gateway, data can be read or written over HTTP.

– librados: Native access to stored data is possible using the librados software libraries through APIs in programming and different scripting languages including Python, Java, C/C++ and PHP.

– RADOS Block Device: Data access here requires integration with a virtualization system such as QEMU/KVM, or block storage access through a kernel module.

NVMe/TCP Support for Ceph

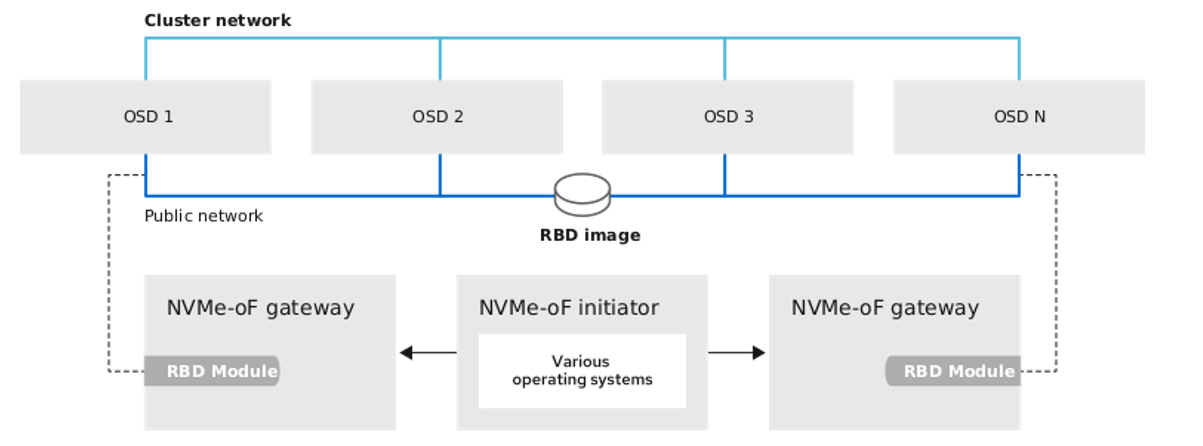

Ceph recently unveiled a technology preview for NVMe/TCP connectivity. However, this implementation involves the integration of Ceph’s NVMe-oF gateway. Figure 2 illustrates how the gateway exports RADOS Block Devices (RBD) to clients over NVMe/TCP. This model introduces additional architectural complexity, leading to bottlenecks and increased storage networking latency caused by the extra hop and protocol translation.

While Ceph strives to support modern high-speed protocols such as NVMe/TCP, the current approach involves the use of protocol gateways and translation layers atop the existing Ceph architecture. This model may improve Ceph’s interoperability, but it deviates from the originally intended design of NVMe/TCP fabric architectures. Lightbits, by contrast, implements that native design and is engineered to offer direct, high-performance host connectivity.

Ceph NVMe-oF gateway from IBM Storage Ceph product documentation, “Ceph NVMe-oF gateway (Technology Preview)”

Ceph Performance

Ceph brings various benefits to OpenStack-based private clouds. To understand Ceph storage performance better, here is a look at a few of them.

High availability & enhanced performance

The erasure coding feature of Ceph improves data availability considerably by adding resiliency and durability. At times, write speeds can be almost twice as high as with the previous backend.
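The trade-off behind erasure coding is easiest to see with a little arithmetic. The sketch below assumes the usual k + m scheme, in which each object is split into k data chunks plus m coding chunks. The function names and the example values (3 copies, k=4, m=2) are illustrative, not settings taken from any particular Ceph cluster.

```python
def replication_profile(copies: int) -> dict:
    """Usable share of raw capacity and device losses survived with N full copies."""
    if copies < 1:
        raise ValueError("need at least one copy")
    return {"usable_fraction": 1 / copies, "failures_tolerated": copies - 1}


def erasure_profile(k: int, m: int) -> dict:
    """Same two figures for k data chunks plus m coding chunks."""
    if k < 1 or m < 0:
        raise ValueError("k must be >= 1 and m must be >= 0")
    return {"usable_fraction": k / (k + m), "failures_tolerated": m}
```

With these example values, both layouts survive the loss of two devices, but three-way replication leaves one third of raw capacity usable while 4+2 erasure coding leaves two thirds.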

Robust security

Active Directory and LDAP integration, along with encryption features, are some of the capabilities in Ceph that help limit unwanted access to the system.

Seamless adoption

Making a shift to software-defined storage platforms can sometimes be complicated. Ceph overcomes the issue by allowing block and object storage in the same cluster without you having to worry about administering separate storage services using other APIs.

Cost-effectiveness

Ceph runs on commodity hardware, making it a cost-effective solution without needing any expensive and extra hardware.

Use Cases of Ceph Block and Ceph Object Storage

Ceph was primarily designed to smoothly run on general-purpose server hardware. It supports elastic provisioning making it economically feasible to build and maintain petabyte-to-exabyte scale data clusters.

Unlike several other mass storage systems that are great at storage but quickly run out of throughput or IOPS before they run out of capacity, Ceph independently scales performance and capacity, enabling it to support varied deployments optimized for a specific use case.

Some of the most common use cases for Ceph Block & Object Storage are listed below:

Use cases of Ceph Block

– Deploy elastic block storage with on-premises cloud

– Storage for VM disk volumes

– Storage for SharePoint, Skype and other collaboration applications

– Primary storage for MySQL and other similar SQL database apps

– Dev/Test Systems

– Storage for IT management apps

Use cases of Ceph Object Storage

– VM disk volume snapshots

– Video/audio/image repository services

– ISO image store and repository service

– Backup and archive

– Deploy services similar to Dropbox within the enterprise

– Deploy Amazon S3-like object store services with on-premises cloud

Advantages and Disadvantages Of Ceph

While Ceph storage is a good choice in many situations, it comes with some disadvantages too. Let’s discuss both in this section.

Advantages

– Despite its relatively short development history, Ceph is free and is an established storage method.

– The software has been extensively documented by its developers.

– A great deal of helpful information is available online for Ceph regarding its setup and maintenance.

– The scalability and integrated redundancy of Ceph storage ensure data security and flexibility within the network.

– The CRUSH algorithm of Ceph ensures high availability.

Disadvantages

– To be able to fully use all of Ceph’s functionalities, a comprehensive network is required due to the variety of components being provided.

– The set-up of Ceph storage is relatively time-consuming, and sometimes the user cannot be entirely sure where the data is physically being stored.

– It requires additional engineering oversight to implement and manage.

Ceph vs AWS S3- Features and Key Differences

In this section, we will briefly compare two popular object stores: AWS S3 and Ceph Object Gateway (RadosGW). We will keep the focus on common features and some of the key differences.

While Amazon S3 (released in 2006) is primarily a public object store provided by AWS and guarantees robust 99.9% availability of objects, Ceph storage is an open-source software that offers distributed object, block and file storage.

Licensed under the LGPL 2.1 license, the Ceph Object Gateway daemon (released in 2006) offers two different sets of APIs:

1. One that is compatible with a subset of the Amazon S3 RESTful APIs

2. One that is compatible with a subset of the OpenStack Swift API.

One of the key differences between S3 and Ceph Storage is that while Amazon S3 is a proprietary solution available only on Amazon commercial public cloud (AWS), Ceph is an open-source product that can be easily installed on-premises as a part of a private cloud.

Another difference between the two has historically been consistency. Ceph provides strong consistency, which means that a new object and changes to an object are guaranteed to be visible to all of the clients. Amazon S3, by contrast, originally offered read-after-write consistency for creating new objects and only eventual consistency for updating and deleting objects; since December 2020, S3 also provides strong read-after-write consistency for these operations.

Why is Ceph Not Enough for Modern Workloads?

While there is no denying that Ceph is highly scalable and positioned as a one-size-fits-all solution, it has some inherent architectural shortcomings. Ceph storage is not suitable for modern workloads for the following reasons:

1. Enterprises either working with the public cloud, using their own private cloud or moving to modern applications require low latency and consistent response times. While BlueStore (a back-end object store for Ceph OSDs) helps to improve average and tail latency to an extent, it cannot necessarily take advantage of the benefits of NVMe® flash.

2. Modern workloads typically deploy local NVMe® flash on bare metal to get the best possible performance, and Ceph is not equipped to realize the optimized performance of this new media. In fact, Ceph in a Kubernetes environment, where local flash is recommended, can be an order of magnitude slower than local flash.

3. Ceph has comparatively poor flash utilization (15-25%). In case of a failure with Ceph or the host, the rebuild of shared storage can be very slow because of massive traffic going over the network for a long period.
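To get a feel for why network-bound rebuilds take so long, here is a back-of-the-envelope sketch in Python. It assumes the network is the only bottleneck and ignores disk throughput, protocol overhead and throttling, so it gives a lower bound at best. The function name, the `share` parameter and the numbers in the note below are hypothetical, not measurements.

```python
def rebuild_hours(data_tb: float, network_gbps: float, share: float = 1.0) -> float:
    """Lower bound on hours needed to copy `data_tb` terabytes over the network.

    `network_gbps` is the link speed in gigabits per second and `share` is the
    fraction of that bandwidth given to recovery traffic (assumed, 0 < share <= 1).
    """
    if data_tb < 0 or network_gbps <= 0 or not 0 < share <= 1:
        raise ValueError("invalid input")
    bits_to_move = data_tb * 1e12 * 8           # terabytes -> bits
    bits_per_second = network_gbps * 1e9 * share
    return bits_to_move / bits_per_second / 3600
```

For example, re-replicating 10 TB over a 10 Gb/s link takes a little over two hours even if recovery gets the whole link, and twice that if it is limited to half the bandwidth so that client traffic keeps flowing.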

To learn more about Ceph performance in a Kubernetes environment, read the “Kubernetes Assimilation: Persistence is Futile” blog.

Conclusion

With data taking the center stage of almost every business, picking the right storage platform is becoming increasingly important. Ceph storage is designed to make your data more accessible to you and your business applications.

However, although Ceph is a good choice for applications that are fine with spinning drive performance, it has certain architectural shortcomings that make it unsuitable for high-performance, scale-out databases and other similar web-scale software infrastructure solutions.

Lightbits, a software-defined storage solution for NVMe® over TCP, is an excellent alternative for Ceph storage especially when simplicity, cost-optimization, low latency and consistent performance are the priorities. Apart from being super resilient, it offers rich data features not typically associated with NVMe®, such as optional compression and thin provisioning.

Lightbits also works great with various cloud-native application environments because of several plugins for OpenStack (Cinder) and Kubernetes (CSI), and it can also be used on bare metal while offering robust scalability at the same time.